Major migrations for mature software projects can be challenging, but you shouldn’t be afraid of them. How you approach a migration depends on your main concern, for example whether you want to move off specific technologies. As a company grows, adopting practices like automation becomes more important. There comes a point where you focus on reliability, because your customers matter more than delivering the next piece of functionality.

# Background

You may have heard about WordPress from the blogging/SEO community or from a PHP developer. But what about the other two, EC2 and EKS? I first heard about WordPress when I started blogging in 2016-2017, and my first blog was built on it. WordPress is a popular free and open-source content management system, used by 43.2% of all websites on the internet. I needed to host that site in the cloud. I picked up a few insights about EC2 along the way, though I ended up choosing cPanel with shared hosting. Later on, I migrated my WordPress site to JAMStack.

And what about EKS? It was the first managed Kubernetes service I worked with. I got my first exposure to the CNCF space a year ago, and EKS was my starter pack for managing Kubernetes resources in production. Today I will share how I migrated a WordPress site with 150k real-time users from EC2 to EKS.

You can also check out my previous blog post on how to deploy WordPress on Lightsail. Trust me, moving your site to a new cloud service or a new provider is a bit like packing up and moving to a new place on short notice: it takes investment and, of course, time. It’s always challenging because you don’t know where to start; you experiment, get lost along the way, and finally reach your destination.

# Migration

Here, we discuss a high-level overview of the migration. We won’t go into the codebase or explain everything step by step. Your tools and stack might be different, but you can at least take away the patterns if you are planning a migration too.

The lesson I have learned so far from using modern tools and technologies to solve problems is that no single tool covers all the requirements. The key is always composition. I won’t be discussing why the WordPress site needed to migrate. I will write a separate post about why to use Kubernetes and the cases where you shouldn’t.

Here is an overview of the tools we will use.

Pre-requisites

- Docker: Containerization

- WordPress: CMS (our application)

- Terraform & a Terraform Cloud account: Infrastructure as Code

- Cloudflare: DNS

- AWS account: an on-demand cloud computing platform

- kubectl & helm: CLI for interacting with your k8s cluster & its package manager

- GitLab: CI/CD platform

- Many more

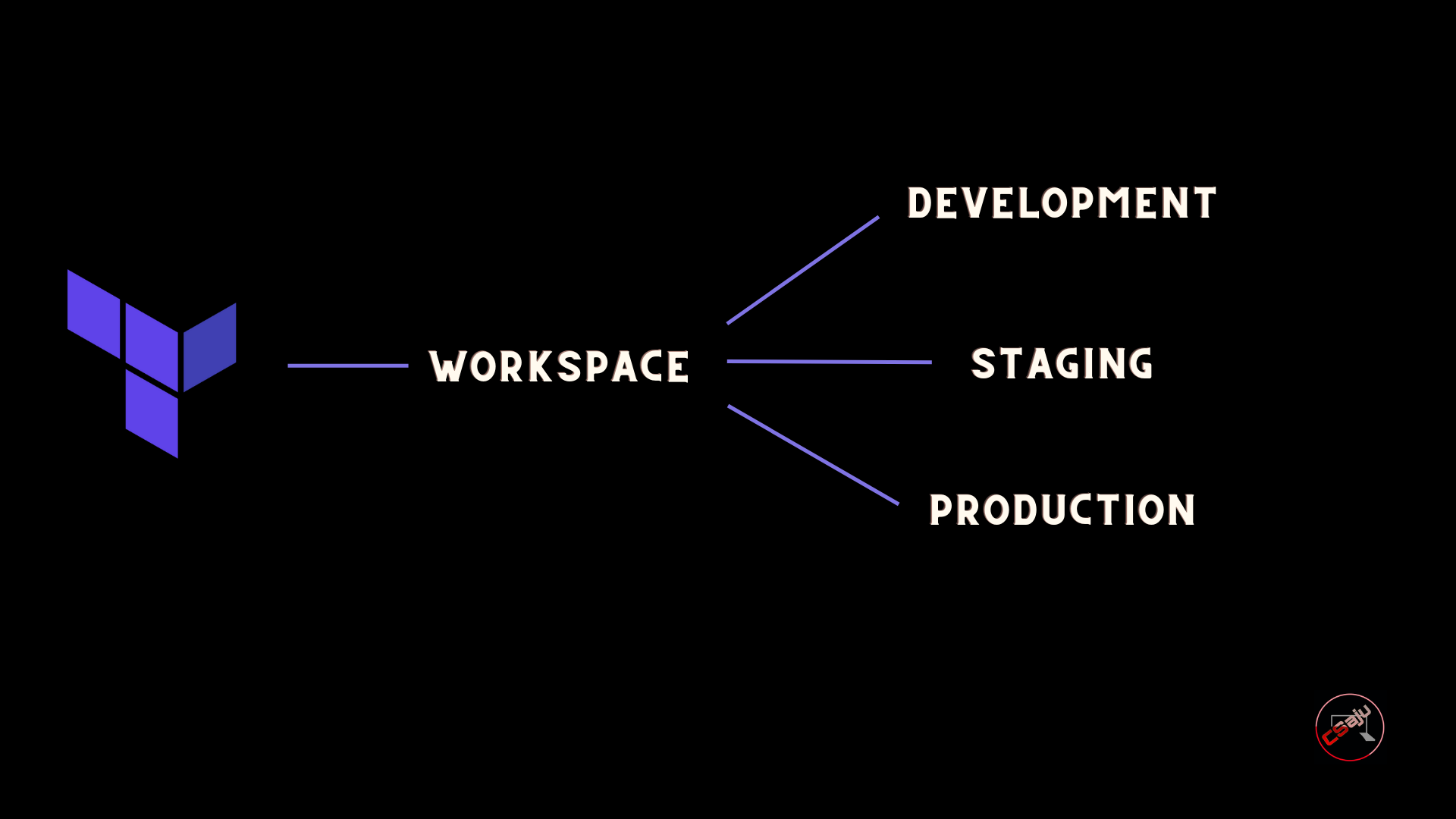

Doing a migration once by hand is easy and manageable. Automating it is hard. The AWS infrastructure is set up via Terraform Cloud.

Understanding the application and its nature is necessary before carrying out the migration.

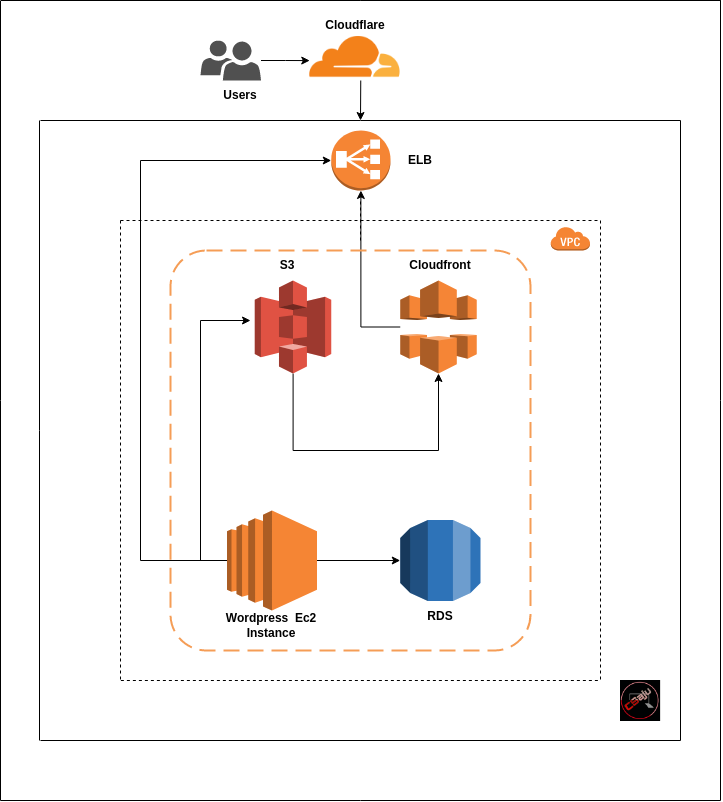

Here is the architecture of the existing WordPress application hosted on EC2.

The disappointing reality is that many tech migrations fail or take much longer than expected.

Kubernetes has great potential, and not only for scaling. It also provides high availability through self-healing components. I had set up a local cluster with minikube and experimented with it as if it were a bare-metal setup. I also worked out how to spin up a cluster by launching three EC2 instances and using kubeadm to join the worker nodes to the master node, which might be a good way to do it. However, the security groups for the kube-apiserver, etcd, kube-scheduler, and so on all need to be configured, which makes it far more complicated than expected. Managing a self-hosted k8s cluster also becomes difficult later on. Choosing EKS, the managed Kubernetes service, looked like the better option: it handles everything from upgrades to minor changes without the headaches.

Fast forward: the k8s cluster was set up via Terraform. The same configuration also creates a Kubernetes ServiceAccount object named “aws-load-balancer-controller”. The IaC workspace for the k8s cluster also allows the node security group to access RDS.
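Once Terraform has applied, a quick sanity check from a machine with cluster access can confirm the result. This is only a sketch: `verify_cluster` is a hypothetical helper name, and it assumes the service account lives in the `kube-system` namespace, which is a common choice for the AWS Load Balancer Controller but is not stated above.

```shell
# Hypothetical helper: checks that the nodes are visible and the service account exists.
# Assumption: the namespace defaults to kube-system; pass another one if yours differs.
verify_cluster() {
  local ns="${1:-kube-system}"

  # Lists the worker nodes; fails if the API server is unreachable.
  kubectl get nodes || return 1

  # Fails with a NotFound error if the service account was not created.
  kubectl get serviceaccount aws-load-balancer-controller -n "$ns"
}
```

Calling `verify_cluster` with no argument checks `kube-system`; `verify_cluster my-namespace` checks another namespace.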

I containerized the WordPress codebase with Docker so that I could push the image to the private GitLab Docker registry. While containerizing the codebase, I bundled the WP packages into the image. Every commit on the respective branch triggers a pipeline in which GitLab builds and pushes a new image. The image contains the custom plugins and other files, which avoids the need for a PVC.

Note: baking the plugins and themes into the image affects how you handle WP version upgrades and changes to your own code.

```dockerfile
FROM bitnami/wordpress:some-version-tag

# Label and credit
LABEL maintainer="Name" \
      description="Something here"

# Restrict Xmlrpc POST method
COPY .htaccess /opt/bitnami/wordpress/

# Copy the themes and plugins
COPY themes/index.php /opt/bitnami/wordpress/wp-content/themes/
RUN mkdir /opt/bitnami/wordpress/wp-content/themes/custom-theme
COPY themes/ap-theme /opt/bitnami/wordpress/wp-content/themes/custom-theme
COPY plugins/custom-plugins.zip /opt/bitnami/wp-cli/.cache/plugin/custom-plugins.zip

# Copy the custom startup scripts
COPY scripts/entrypoint.sh /opt/bitnami/scripts/wordpress/entrypoint.sh
COPY scripts/run.sh /opt/bitnami/scripts/apache/run.sh
COPY scripts/libwordpress.sh /opt/bitnami/scripts/libwordpress.sh
```

entrypoint.sh loads the libraries and installs them inside the cluster’s pod. The script also activates the custom plugin via WP-CLI:

```shell
wp plugin install /opt/bitnami/wp-cli/.cache/plugin/custom-plugins.zip --activate --force
```

Note that we have custom WordPress themes, so we copy those from the source as well. libwordpress.sh loads the database libraries and validates the settings in the WORDPRESS_* env vars, which include user inputs, database configuration, SMTP credentials, S3 credentials, and more. Once everything goes well, run.sh starts Apache.
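With the Dockerfile and scripts in place, the per-commit build and push could look roughly like the sketch below. It is not the original pipeline script: `build_and_push` is a hypothetical name, and it assumes the job has a Docker daemon available (for example through GitLab's Docker-in-Docker service). The `CI_*` variables are GitLab's predefined CI/CD variables.

```shell
# Hypothetical CI helper: builds the WordPress image and pushes it to the
# project's GitLab container registry, tagged with the commit SHA.
build_and_push() {
  # ${VAR:?} aborts with an error if the variable is unset or empty.
  local image="${CI_REGISTRY_IMAGE:?}:${CI_COMMIT_SHA:?}"

  # Pass the password on stdin so it does not show up in the process list.
  printf '%s' "${CI_REGISTRY_PASSWORD:?}" |
    docker login -u "${CI_REGISTRY_USER:?}" --password-stdin "${CI_REGISTRY:?}" || return 1

  docker build -t "$image" . || return 1
  docker push "$image"
}
```

Tagging with the commit SHA means every image maps back to exactly one commit, which is what makes the later Helm rollback meaningful.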

A little about security: a custom .htaccess is copied into the container image here.

```dockerfile
# Restrict Xmlrpc POST method
COPY .htaccess /opt/bitnami/wordpress/
```

In a previous post, I also wrote about the exploitation and prevention of an enabled xmlrpc.

Next, I connected to the RDS instance via a bastion host and dumped the database. A bastion host is like the door of our house (the VPC): we need to secure it but still keep it accessible for the people who need to go in.

```shell
mysqldump -h RDS_ENDPOINT -u DB_USERNAME -p DB_NAME > exported_DB_NAME.sql
```
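For a live site, it can help to wrap the dump in a small helper. The sketch below is an assumption about how one might do it, not the command actually used: `dump_database` is a hypothetical name, and `--single-transaction` is added on the assumption that the WordPress tables use InnoDB, so the dump is a consistent snapshot taken without locking the tables.

```shell
# Hypothetical helper: dump one database from RDS while logged in to the bastion host.
# Usage: dump_database <rds-endpoint> <db-user> <db-name>
dump_database() {
  local host="$1" user="$2" db="$3"
  local out="exported_${db}.sql"

  # -p with no value makes mysqldump prompt for the password.
  mysqldump -h "$host" -u "$user" -p --single-transaction "$db" > "$out" || return 1

  # Print the file name so the caller can check its size or copy it elsewhere.
  echo "$out"
}
```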

As for the CI process, it is triggered by every commit a developer pushes to GitLab. In GitLab CI, the script exports our AWS credentials as global environment variables, fetching them from the AWS Parameter Store. With these, we can get the kubeconfig for accessing the EKS cluster from CI. I used Helm to deploy the whole containerized WordPress app, and to handle rollouts and rollbacks too. Helm charts come with pre-configured app installations that can be deployed with a few simple commands.

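```shell
fetch_credentials() {
  # Body omitted here: fetches credentials from the AWS Parameter Store.
  :
}

export_kubeconfig() {
  # Body omitted here: obtains the kubeconfig for the EKS cluster.
  :
}

deploy() {
  # Install the release if it does not exist yet, otherwise upgrade it.
  helm upgrade --install "${APPLICATION_NAME}" . \
    -f "values/values.${CI_ENVIRONMENT_NAME}.yaml" \
    --set image.repository="${CI_REGISTRY_IMAGE}" \
    --set image.tag="${CI_COMMIT_SHA}" \
    -n "${CI_ENVIRONMENT_NAME}"
}

# Wait until the new pods are rolled out.
kubectl rollout status "deploy/${APPLICATION_NAME}" -n "${CI_ENVIRONMENT_NAME}"
```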
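The bodies of the two helper functions are not shown in this post. As a rough idea of what they could contain, here is a minimal sketch using the AWS CLI. The parameter paths, the `fetch_parameter` helper, and the `EKS_CLUSTER_NAME` and `AWS_REGION` variables are all assumptions made up for illustration; only the `aws ssm get-parameter` and `aws eks update-kubeconfig` commands themselves are standard.

```shell
# Hypothetical helper: read one (optionally encrypted) value from the Parameter Store.
fetch_parameter() {
  aws ssm get-parameter --name "$1" --with-decryption \
    --query 'Parameter.Value' --output text
}

fetch_credentials() {
  # Assumed parameter paths; assign first, then export, so a failed lookup is not masked.
  WORDPRESS_SMTP_PASSWORD="$(fetch_parameter "/wordpress/${CI_ENVIRONMENT_NAME}/smtp_password")" || return 1
  ILAB_AWS_S3_ACCESS_SECRET="$(fetch_parameter "/wordpress/${CI_ENVIRONMENT_NAME}/s3_access_secret")" || return 1
  export WORDPRESS_SMTP_PASSWORD ILAB_AWS_S3_ACCESS_SECRET
}

export_kubeconfig() {
  # Writes or updates a kubeconfig entry so kubectl and helm can reach the cluster.
  aws eks update-kubeconfig --name "${EKS_CLUSTER_NAME:?}" --region "${AWS_REGION:?}"
}
```

In a pipeline, these would typically run in order: `fetch_credentials`, then `export_kubeconfig`, then `deploy`.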

If we need to roll back to the previous release, we have a script that does it via:

```shell
helm rollback "${APPLICATION_NAME}" 0 -n "${CI_ENVIRONMENT_NAME}"
```
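Passing `0` as the revision tells Helm to go back to the previous release. If you want to pick a specific revision instead, a small wrapper like the following sketch can print the recent history first. `rollback_release` is a hypothetical name, and the `--max 5` and `--wait` choices are mine rather than part of the original script.

```shell
# Hypothetical helper: show recent revisions, then roll back.
# Usage: rollback_release [revision]   (defaults to 0, i.e. the previous release)
rollback_release() {
  local revision="${1:-0}"

  # Show the last few revisions so the log records what was available.
  helm history "$APPLICATION_NAME" -n "$CI_ENVIRONMENT_NAME" --max 5

  # --wait blocks until the rolled-back resources are ready.
  helm rollback "$APPLICATION_NAME" "$revision" -n "$CI_ENVIRONMENT_NAME" --wait
}
```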

To sync the asset files to the newly created S3 bucket, I used the AWS CLI with AWS credentials exported locally.

```shell
aws s3 cp <your directory path of assets> s3://<your bucket name>/ --recursive
```

Make sure you have set up the IAM policy properly so that bucket permissions don’t cause trouble while uploading.

To list the media files, you can use the AWS CLI.

```shell
aws s3 ls s3://atasync1/
```
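If the upload has to be repeated, for example to pick up media added between the first copy and the cutover, `aws s3 sync` is an alternative that only transfers new or changed files. The sketch below is a suggestion rather than what was used here; `sync_assets` is a hypothetical name.

```shell
# Hypothetical helper: sync a local assets directory to a bucket.
# Usage: sync_assets <local-dir> <bucket-name> [--dryrun]
sync_assets() {
  local src="$1" bucket="$2" mode="${3:-}"

  if [ "$mode" = "--dryrun" ]; then
    # Only prints what would be transferred; nothing is uploaded.
    aws s3 sync "$src" "s3://${bucket}/" --dryrun
  else
    aws s3 sync "$src" "s3://${bucket}/"
  fi
}
```

Running it once with `--dryrun` is a cheap way to confirm that the IAM policy and paths are right before moving any data.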

To manage the assets in the WordPress dashboard via S3, I used the Media Cloud plugin. Its AWS credentials are configured during the CI process via libwordpress.sh. With this plugin, every newly added asset is uploaded to the storage bucket.

```shell
# Configure SMTP credentials
wordpress_conf_set "AWS_SES_USER" "$WORDPRESS_SMTP_USER"
wordpress_conf_set "AWS_SES_PASSWORD" "$WORDPRESS_SMTP_PASSWORD"
wordpress_conf_set "AWS_SES_HOST" "$WORDPRESS_SMTP_HOST"
wordpress_conf_set "AWS_SES_PORT" "$WORDPRESS_SMTP_PORT_NUMBER"
wordpress_conf_set "AWS_SES_PROTOCOL" "$WORDPRESS_SMTP_PROTOCOL"

# Configure S3 credentials
wordpress_conf_set "ILAB_AWS_S3_BUCKET" "$ILAB_AWS_S3_BUCKET"
wordpress_conf_set "ILAB_AWS_S3_ACCESS_KEY" "$ILAB_AWS_S3_ACCESS_KEY"
wordpress_conf_set "ILAB_AWS_S3_ACCESS_SECRET" "$ILAB_AWS_S3_ACCESS_SECRET"
wordpress_conf_set "ILAB_AWS_S3_REGION" "$ILAB_AWS_S3_REGION"
```

These credentials are fetched from the AWS Parameter Store while the GitLab CI/CD pipeline runs.

The database SQL file is imported via the bastion host. Before doing that, we have to set up a few things on the newly created RDS instance.

```sql
CREATE DATABASE MY_DATABASE;
CREATE USER 'newuser'@'%' IDENTIFIED BY 'super_random_password';
GRANT ALL PRIVILEGES ON MY_DATABASE.* TO 'newuser'@'%';
FLUSH PRIVILEGES;
```

After creating the new user account, the dump is imported from the bastion host, where the SQL file is ready and waiting.

```shell
mysql -h host-url -u username -p database_name < file.sql
```
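After the import, a simple way to gain some confidence is to compare the number of tables in the old and new databases. This is a sketch of one possible check, not a step from the original migration; `count_tables` is a hypothetical name.

```shell
# Hypothetical helper: print the number of tables in a schema.
# Usage: count_tables <host> <db-user> <db-name>
count_tables() {
  # -N drops the column header and -B gives plain batch output, so only the number is printed.
  mysql -h "$1" -u "$2" -p -N -B -e \
    "SELECT COUNT(*) FROM information_schema.tables WHERE table_schema = '$3';"
}
```

Run it once against the old database and once against the new one; the two numbers should match.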

As for .gitlab-ci.yml, it has many stages. It ships the container image and deploys it on the EKS cluster. The GitLab runner executes the scripts above in their respective stages.

Test your application by port-forwarding and verifying on the browser.

```shell
kubectl port-forward pod-name -n your_namespace 80:80
```
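The same check can be scripted instead of done in the browser. The following is a minimal sketch under a few assumptions: `smoke_test` is a hypothetical name, the pod serves HTTP on port 80 as in the command above, and a local port of 8080 is used by default because binding local port 80 usually needs elevated privileges.

```shell
# Hypothetical helper: port-forward to a pod and check the HTTP status of the home page.
# Usage: smoke_test <pod-name> <namespace> [local-port]
smoke_test() {
  local pod="$1" ns="$2" local_port="${3:-8080}"

  # Start the tunnel in the background and remember its PID.
  kubectl port-forward "$pod" -n "$ns" "${local_port}:80" >/dev/null 2>&1 &
  local pf_pid=$!
  sleep 3   # give the tunnel a moment to come up

  local code
  code="$(curl -s -o /dev/null -w '%{http_code}' "http://127.0.0.1:${local_port}/")"

  # Tear the tunnel down again.
  kill "$pf_pid" 2>/dev/null
  wait "$pf_pid" 2>/dev/null

  echo "$code"
  # Treat 2xx and 3xx as healthy; WordPress may redirect to its configured site URL.
  [ "$code" -ge 200 ] && [ "$code" -lt 400 ]
}
```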

The home page might be blank if you are using a custom theme as the default; all you need to do is log in and select it. Before updating the DNS record, make sure the respective teams have been notified. Then update it gracefully during the hour with the least user traffic. That’s how the migration was successfully completed.

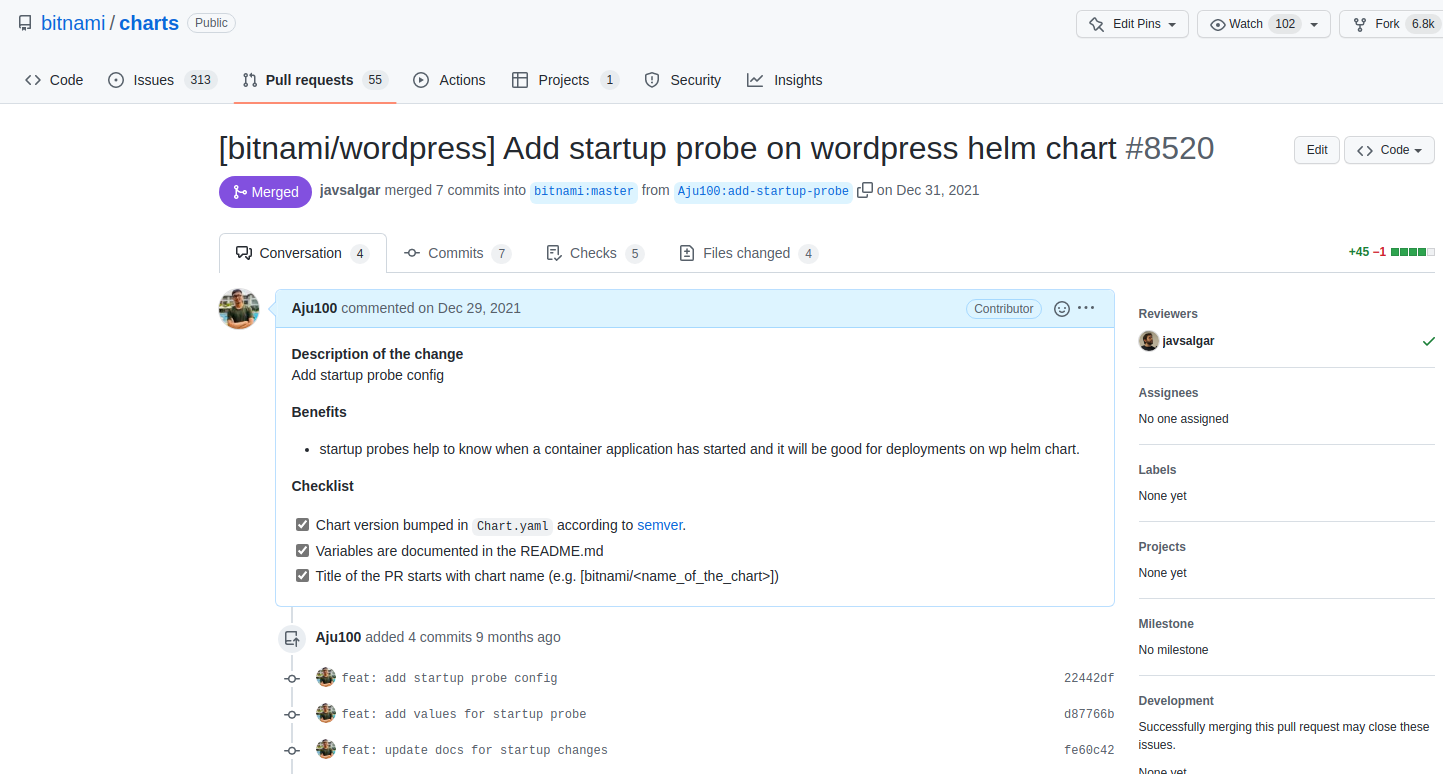

During the migration journey, I made an open-source contribution too. I was using the open-source WordPress Helm chart from Bitnami as a reference, and it lacked configuration for a health check (the startup probe). Since this was missing from the current Bitnami Helm chart, I created an issue and opened a pull request, which was eventually merged. Here is the URL of that PR: https://github.com/bitnami/charts/pull/8520#event-5830766834

By the way, the community was welcoming and helped me solve this issue. It was an amazing experience in open source.

# Conclusion

With that, we’ve walked through the whole process, from managing the k8s cluster to the CI/CD process. I wouldn’t say it’s the easiest process, and migrating a website is complex. But I hope it gives you a chance to dive a little into how the migration of WordPress onto an EKS cluster was done.

Thanks so much for reading. I’d love to hear any feedback you have; please ping me on Twitter or LinkedIn.